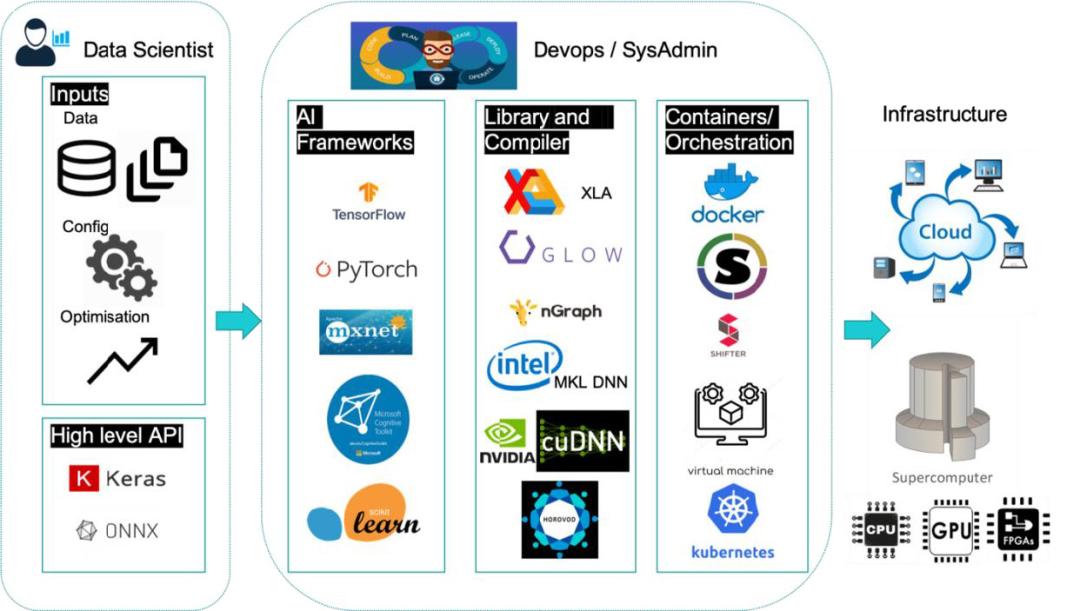

Graph compilers optimise the DNN graph and then generate optimised code for a target hardware backend, thus accelerating the training and deployment of DL models. We have reviewed a number of compilers, including XLA, TC, TVM, ONNC, Glow, Intel nGraph, PlaidML, and TensorRT.
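"Optimising the graph" can be made concrete with two classic graph-level passes: constant folding and dead-node elimination. The following is a minimal sketch on a toy graph. The dictionary-based graph format and the function names are hypothetical illustrations, not the IR of any of the compilers listed above.

```python
from typing import Any, Dict, Iterable, Tuple

# Hypothetical toy IR: node name -> (op, args). Nodes are listed in topological order.
Graph = Dict[str, Tuple[str, Any]]

OPS = {
    "add": lambda a, b: a + b,
    "mul": lambda a, b: a * b,
}

def constant_fold(graph: Graph) -> Graph:
    """Replace every op node whose inputs are all constants with one constant node."""
    folded: Graph = {}
    for name, (op, args) in graph.items():
        if op in OPS and all(folded[a][0] == "const" for a in args):
            folded[name] = ("const", OPS[op](*(folded[a][1] for a in args)))
        else:
            folded[name] = (op, args)
    return folded

def eliminate_dead(graph: Graph, outputs: Iterable[str]) -> Graph:
    """Keep only the nodes reachable backwards from the requested outputs."""
    live, stack = set(), list(outputs)
    while stack:
        name = stack.pop()
        if name in live:
            continue
        live.add(name)
        op, args = graph[name]
        if op not in ("const", "input"):
            stack.extend(args)
    return {n: node for n, node in graph.items() if n in live}

g: Graph = {
    "x": ("input", None),
    "two": ("const", 2.0),
    "three": ("const", 3.0),
    "scale": ("mul", ["two", "three"]),  # foldable at compile time
    "y": ("mul", ["x", "scale"]),        # depends on a runtime input
}
opt = eliminate_dead(constant_fold(g), ["y"])
print(opt)  # {'x': ('input', None), 'scale': ('const', 6.0), 'y': ('mul', ['x', 'scale'])}
```

Real graph compilers run many such passes (fusion, layout changes, and so on) before code generation; folding and dead-code elimination are simply the easiest to show.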

The XLA (Accelerated Linear Algebra) compiler [1] accelerates the linear algebra computations in TensorFlow models; enabling it yields a 1.15x speedup on standard benchmarks.
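Operator fusion is one optimisation commonly credited for XLA's gains: several element-wise operations are merged into one kernel so intermediate results never have to be written to memory. (In recent TensorFlow 2.x releases XLA can be requested per function with `tf.function(jit_compile=True)`.) The pure-Python sketch below only mimics the idea; the function names are hypothetical, and real XLA fuses HLO operations into compiled CPU/GPU kernels rather than Python loops.

```python
from typing import List

def unfused(x: List[float], w: float, b: float) -> List[float]:
    # Three separate "kernels", each materialising a full intermediate buffer.
    t1 = [xi * w for xi in x]           # kernel 1: multiply
    t2 = [ti + b for ti in t1]          # kernel 2: add bias
    return [max(ti, 0.0) for ti in t2]  # kernel 3: ReLU

def fused(x: List[float], w: float, b: float) -> List[float]:
    # One fused "kernel": each element goes through multiply, add and ReLU
    # in a single pass, so no intermediate buffers are created.
    return [max(xi * w + b, 0.0) for xi in x]

x = [-2.0, -0.5, 0.0, 1.5, 3.0]
print(fused(x, 2.0, 1.0))  # [0.0, 0.0, 1.0, 4.0, 7.0]
```

Both versions compute the same values; the fused one simply touches memory once per element, which is where the speedup comes from on real hardware.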

Tensor Comprehensions (TC) [3] aims to improve the performance of new custom operators that existing libraries do not yet fully support. It provides a mathematics-like language for expressing operators and compiles them using polyhedral JIT compilation and autotuning. TC supports Caffe2 and PyTorch and mainly focuses on optimisation across operators and on optimising for data layout and tensor size. Evaluated on multiple popular kernels, TC achieves up to a 4x speedup compared to Caffe2 with cuBLAS.
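The autotuning step can be pictured as "try several compiled variants, time them, keep the fastest". The sketch below assumes a deliberately tiny search space, the six loop orders of a naive matrix multiply; TC's own autotuner searches a much larger space of compilation options, and every name here is hypothetical. In pure Python the timing differences are small, so the point is the search loop, not the winner.

```python
import itertools
import time
from typing import Callable, Iterable, List, Tuple

Matrix = List[List[float]]
Order = Tuple[str, ...]

def matmul_with_order(a: Matrix, b: Matrix, order: Order) -> Matrix:
    """Compute C = A @ B, nesting the i, j and k loops in the given order."""
    m, kdim, n = len(a), len(b), len(b[0])
    extents = {"i": m, "j": n, "k": kdim}
    c = [[0.0] * n for _ in range(m)]
    for x in range(extents[order[0]]):
        for y in range(extents[order[1]]):
            for z in range(extents[order[2]]):
                idx = dict(zip(order, (x, y, z)))
                c[idx["i"]][idx["j"]] += a[idx["i"]][idx["k"]] * b[idx["k"]][idx["j"]]
    return c

def autotune(candidates: Iterable[Order], run: Callable[[Order], object], repeats: int = 3):
    """Time every candidate configuration and return (fastest_config, seconds_per_run)."""
    best_cfg, best_time = None, float("inf")
    for cfg in candidates:
        start = time.perf_counter()
        for _ in range(repeats):
            run(cfg)
        elapsed = (time.perf_counter() - start) / repeats
        if elapsed < best_time:
            best_cfg, best_time = cfg, elapsed
    return best_cfg, best_time

a = [[float(i + j) for j in range(16)] for i in range(16)]
b = [[float(i - j) for j in range(16)] for i in range(16)]
orders = list(itertools.permutations("ijk"))
best_order, seconds = autotune(orders, lambda o: matmul_with_order(a, b, o))
print("fastest loop order:", "".join(best_order))
```

Every candidate must compute the same result; autotuning only changes how fast it is computed.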

The TVM [2] compiler builds on Halide’s concept of separating the computation from its schedule. TVM introduces a tensor expression language (a DSL) for constructing tensor operators, which are then converted into optimised kernels for different target hardware. TVM currently supports TensorFlow, MXNet, PyTorch, Keras, and CNTK models on CPUs, GPUs, and FPGAs (embedded and server). It delivers portable performance, with reported speedups of about 1.2x to 3.8x over existing frameworks that rely on hand-optimised libraries.
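The compute/schedule separation means an operator is defined once as *what* each output element is, while one or more schedules decide *how* the loops run (order, tiling, and so on). A minimal pure-Python sketch of the idea follows; in TVM itself this corresponds roughly to `te.compute` plus schedule primitives such as `split` and `reorder`, but the functions below are hypothetical and do not use TVM's API.

```python
from typing import Callable, List

Matrix = List[List[float]]
Compute = Callable[[int, int], float]

def matmul_compute(a: Matrix, b: Matrix) -> Compute:
    """The 'compute': C[i][j] as a function of its indices, with no loop order implied."""
    kdim = len(b)
    return lambda i, j: sum(a[i][k] * b[k][j] for k in range(kdim))

def schedule_naive(m: int, n: int, f: Compute) -> Matrix:
    """Schedule 1: plain row-by-row traversal of the output."""
    return [[f(i, j) for j in range(n)] for i in range(m)]

def schedule_tiled(m: int, n: int, f: Compute, tile: int) -> Matrix:
    """Schedule 2: split both output loops into outer (tile) and inner (within-tile) loops."""
    out = [[0.0] * n for _ in range(m)]
    for io in range(0, m, tile):
        for jo in range(0, n, tile):
            for i in range(io, min(io + tile, m)):
                for j in range(jo, min(jo + tile, n)):
                    out[i][j] = f(i, j)
    return out

a = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
b = [[7.0, 8.0], [9.0, 10.0], [11.0, 12.0]]
f = matmul_compute(a, b)
print(schedule_naive(2, 2, f))          # [[58.0, 64.0], [139.0, 154.0]]
print(schedule_tiled(2, 2, f, tile=1))  # [[58.0, 64.0], [139.0, 154.0]]
```

Because the schedule never changes the compute definition, a compiler can explore many schedules per target without risking a different result.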

ONNX (Open Neural Network Exchange) is an open standard format for defining and representing deep learning models. It allows AI developers to move models between DL frameworks or to use the combination of tools that best suits their needs. This community project, created by Facebook and Microsoft, has gained support from a number of industry partners. ONNC (Open Neural Network Compiler) [5] is a retargetable compiler, built on top of LLVM, that compiles ONNX-based models to supported hardware such as CPUs, GPUs, FPGAs, and DSPs.
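"Retargetable" means the same framework-neutral graph can be handed to different backends, each producing code for its own hardware. The sketch below assumes a toy node format whose field names loosely echo ONNX's node structure (operator type, inputs, outputs); it is not the `onnx` package and not ONNC's API, and both backends and their mnemonics are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Node:
    # Field names loosely echo ONNX's NodeProto (op_type, input, output);
    # this is a plain dataclass, not the onnx package.
    op_type: str
    inputs: List[str]
    outputs: List[str]

# One framework-neutral graph: out = Relu(x + y)
GRAPH = [Node("Add", ["x", "y"], ["s"]), Node("Relu", ["s"], ["out"])]

class InterpreterBackend:
    """Hypothetical target 1: 'compiles' the graph into a Python closure that executes it."""
    KERNELS: Dict[str, Callable[..., float]] = {
        "Add": lambda a, b: a + b,
        "Relu": lambda a: max(a, 0.0),
    }

    def compile(self, graph: List[Node]) -> Callable[[Dict[str, float]], float]:
        def program(inputs: Dict[str, float]) -> float:
            env = dict(inputs)
            for node in graph:
                args = [env[name] for name in node.inputs]
                env[node.outputs[0]] = self.KERNELS[node.op_type](*args)
            return env[graph[-1].outputs[0]]
        return program

class ToyAcceleratorBackend:
    """Hypothetical target 2: emits made-up mnemonics for an imaginary accelerator."""
    MNEMONICS = {"Add": "VADD", "Relu": "VRELU"}

    def compile(self, graph: List[Node]) -> List[str]:
        return [f"{self.MNEMONICS[n.op_type]} {', '.join(n.outputs + n.inputs)}" for n in graph]

# Retargeting = choosing a different backend for the same graph.
BACKENDS = {"interp": InterpreterBackend(), "toy-accel": ToyAcceleratorBackend()}
run = BACKENDS["interp"].compile(GRAPH)
print(run({"x": 2.0, "y": 1.0}))             # 3.0
print(BACKENDS["toy-accel"].compile(GRAPH))  # ['VADD s, x, y', 'VRELU out, s']
```

Adding a new hardware target then means writing one more backend, while the front end that reads the standard format stays unchanged.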

Glow [4] optimises neural networks by lowering the graph through two intermediate representations: a high-level graph IR and a low-level instruction-based IR. Glow works with PyTorch and supports multiple operators and targets. Because it can consume ONNX models as input, it can also support models from other frameworks.

Glow has been found to offer a 2.7x speedup over TensorFlow (with XLA) and a 1.3x speedup over TVM (without autotuning) on ResNet-50.
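Glow's two-level lowering can be sketched in two stages. First, compound graph nodes are expanded into simpler ones (the sketch assumes a fully connected layer becomes a matrix multiply followed by a bias add). Second, the graph is turned into a linear instruction list in which memory for temporaries is allocated and freed explicitly. The node tuples, op names and instruction syntax below are hypothetical simplifications, not Glow's actual IR.

```python
from typing import Dict, List, Tuple

Node = Tuple[str, List[str], str]  # (op, inputs, output)

def lower_graph(nodes: List[Node]) -> List[Node]:
    """Stage 1 (graph level): expand compound ops into simpler ones."""
    lowered: List[Node] = []
    for op, ins, out in nodes:
        if op == "FullyConnected":
            x, w, b = ins
            tmp = out + "_mm"
            lowered.append(("MatMul", [x, w], tmp))
            lowered.append(("BatchedAdd", [tmp, b], out))
        else:
            lowered.append((op, ins, out))
    return lowered

def to_instructions(nodes: List[Node], graph_outputs: List[str]) -> List[str]:
    """Stage 2 (instruction level): alloc each temporary before use, dealloc after its last use.
    Graph inputs and outputs are assumed to be allocated by the caller."""
    last_use: Dict[str, int] = {}
    for idx, (_, ins, _) in enumerate(nodes):
        for name in ins:
            last_use[name] = idx
    produced = {out for _, _, out in nodes}
    instrs: List[str] = []
    for idx, (op, ins, out) in enumerate(nodes):
        if out not in graph_outputs:
            instrs.append(f"alloc {out}")
        instrs.append(f"{op.lower()} {out} <- {', '.join(ins)}")
        for name in ins:
            if name in produced and name not in graph_outputs and last_use[name] == idx:
                instrs.append(f"dealloc {name}")
    return instrs

graph = [("FullyConnected", ["x", "W", "b"], "fc"), ("Relu", ["fc"], "y")]
for line in to_instructions(lower_graph(graph), ["y"]):
    print(line)
# alloc fc_mm
# matmul fc_mm <- x, W
# alloc fc
# batchedadd fc <- fc_mm, b
# dealloc fc_mm
# relu y <- fc
# dealloc fc
```

Making allocation explicit at the lower level is what lets a compiler reason about buffer reuse and memory planning, which a pure dataflow graph hides.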

Intel nGraph is an end-to-end compiler for training and inference that supports TensorFlow, MXNet, ONNX, and PaddlePaddle. By leveraging MKL-DNN on Intel Xeon Scalable processors, nGraph can deliver as much as a 45x increase in normalized inference throughput.

PlaidML, a compiler for deep learning, is also available as a component of the Intel nGraph compiler stack. PlaidML supports Keras, ONNX, and nGraph, and accelerates execution by automatically generating tiled code, with performance comparable to CUDA on NVIDIA GPUs. This is mainly useful for supporting new kernels that existing libraries do not provide.
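Automatically generating tiled code means the compiler emits kernel source specialised for chosen tile sizes and then compiles it, rather than relying on a pre-written library kernel. The sketch below assumes Python source as the "target language" and a single tile size for both output loops; PlaidML itself generates device code and chooses tiling for the hardware, and the names here are hypothetical.

```python
from typing import Callable, Dict, List

Matrix = List[List[float]]

def generate_tiled_matmul(tile: int) -> str:
    """Emit Python source for a matmul whose i and j loops are tiled by `tile`."""
    return f"""
def kernel(a, b):
    m, kdim, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for io in range(0, m, {tile}):
        for jo in range(0, n, {tile}):
            for i in range(io, min(io + {tile}, m)):
                for j in range(jo, min(jo + {tile}, n)):
                    acc = 0.0
                    for k in range(kdim):
                        acc += a[i][k] * b[k][j]
                    c[i][j] = acc
    return c
"""

def build_kernel(tile: int) -> Callable[[Matrix, Matrix], Matrix]:
    """'JIT-compile' the generated source and return the resulting function."""
    namespace: Dict[str, object] = {}
    exec(generate_tiled_matmul(tile), namespace)
    return namespace["kernel"]  # type: ignore[return-value]

a = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
b = [[1.0, 0.0, 2.0], [0.0, 1.0, 3.0]]
kernel = build_kernel(tile=2)
print(kernel(a, b))  # [[1.0, 2.0, 8.0], [3.0, 4.0, 18.0], [5.0, 6.0, 28.0]]
```

The tile size is baked into the generated source as a constant, which is what lets a real code generator unroll and vectorise the inner loops for a particular device.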

NVIDIA’s TensorRT compiler is built on top of CUDA and optimises deep learning inference for high throughput and low latency. TensorRT supports ONNX and therefore, by extension, models trained in different frameworks. Using reduced-precision optimisations, TensorRT-optimised models have been found to deliver 40x faster inference than CPU-only platforms.
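Reduced precision typically means running layers in FP16 or INT8 instead of FP32. The sketch below shows generic symmetric per-tensor INT8 quantization, where one scale maps the largest magnitude to 127. It is an illustration of the underlying arithmetic only; it is not TensorRT's API or its calibration procedure, and the function names are hypothetical.

```python
from typing import List, Tuple

def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8: scale = max|x| / 127, q = clamp(round(x / scale), -127, 127)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate real values."""
    return [qi * scale for qi in q]

x = [0.5, -1.27, 0.0, 1.0]
q, s = quantize_int8(x)
x_hat = dequantize(q, s)
print(q)  # [50, -127, 0, 100]
```

Each value is stored in one byte instead of four, and the rounding error per element is at most half a scale step; choosing a good scale from representative data is the job of a calibration step.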